How to understand AI models, learning logic, and real-world limitations

Jan 23, 2026

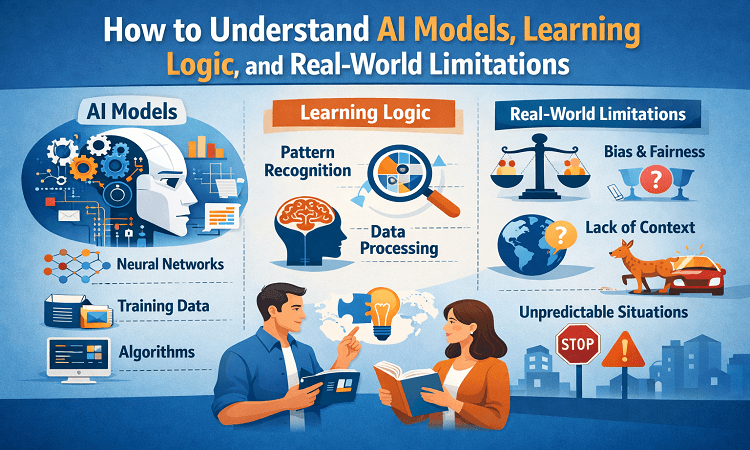

Many people now rely heavily on artificial intelligence. From the outside it looks impressive and can generate content in seconds. But once you start working with AI closely, you realize it is not magic. Every model follows a specific learning logic and operates within very real limits.

Learners who begin with an AI Course in Noida usually start by training models and checking accuracy scores. As they gain experience, they learn something more important: knowing where and why a model fails matters more than simply building one.

What Does an AI Model Actually Do?

An AI model does not think or understand the way a human does. It learns patterns from data and applies those patterns to new inputs. If it has seen similar examples before, it performs well. If not, it struggles.
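To make "learns patterns from data" concrete, here is a minimal Python sketch under stated assumptions: the hidden rule is y = x², the model is a straight line fitted by least squares, and it only ever sees inputs from 0 to 5. The helper names (`fit_line`, `true_rule`, `predict`) are illustrative, not from any library. The point is the gap between errors on familiar inputs and errors far outside the training range.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def true_rule(x):
    # Hypothetical hidden relationship for this toy example: y = x squared.
    return x ** 2


# The model only ever sees inputs between 0 and 5.
train_x = [0, 1, 2, 3, 4, 5]
train_y = [true_rule(x) for x in train_x]
slope, intercept = fit_line(train_x, train_y)


def predict(x):
    return slope * x + intercept


# Worst error on inputs like the ones seen in training.
near_error = max(abs(predict(x) - true_rule(x)) for x in train_x)
# Error on an input far outside the training range.
far_error = abs(predict(20) - true_rule(20))

print(f"worst error inside training range: {near_error:.2f}")
print(f"error at x = 20: {far_error:.2f}")
```

This prints a worst in-range error of about 3.33 and an error of about 303.33 at x = 20: the learned pattern is serviceable near familiar data and badly wrong far from it.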

Different problems require different models. Some are simple and fast, while others are complex and powerful but harder to control.

Common model categories include:

● Simple statistical models for basic prediction.

● Tree-based models for rule-like decisions.

● Neural networks for image, text, and speech tasks.

● Large language models for conversational systems.

During Artificial Intelligence Training in Delhi, learners usually notice that choosing the right model for the problem matters more than choosing the most advanced one.

How AI Models Learn From Data

AI models learn by making mistakes and correcting them. They compare their predictions with actual outcomes and adjust their internal parameters to reduce the error. This process repeats thousands or even millions of times.
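For many models, this compare-and-adjust loop is a form of gradient descent. The sketch below assumes a one-parameter model y = w * x, mean squared error as the measure of "mistakes", and a hand-picked learning rate; the data is a made-up toy set. Real frameworks compute gradients automatically, but the loop has the same shape.

```python
# Toy labeled data generated by y = 3x (the rule the model must discover).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]

w = 0.0               # the model starts with a bad guess
learning_rate = 0.01  # step size, chosen by hand for this toy data (assumption)

for _ in range(1000):
    # 1. Predict with the current weight.
    preds = [w * x for x in xs]
    # 2. Compare: mean squared error between predictions and actual outcomes.
    errors = [p - y for p, y in zip(preds, ys)]
    loss = sum(e * e for e in errors) / len(xs)
    # 3. Adjust: step against the gradient, d(loss)/dw = 2 * mean(error * x).
    grad = 2 * sum(e * x for e, x in zip(errors, xs)) / len(xs)
    w -= learning_rate * grad

print(f"learned w = {w:.4f}, final loss = {loss:.6f}")
```

Each pass shrinks the distance between w and 3 by a constant factor, so w ends up at 3.0000. A learning rate that is too large would make the same loop overshoot and diverge, which is why step size is treated as a tuning choice.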

There are three main learning styles:

| Learning Method | How Learning Happens | Where It’s Used |
|---|---|---|
| Supervised | Learns from labeled examples. | Predictions, classification. |
| Unsupervised | Finds patterns in unlabeled data on its own. | Grouping, clustering. |
| Reinforcement | Learns through rewards for its actions. | Control systems, agents. |

While this sounds technical, the idea behind it is simple: models learn only from what they are shown, nothing more.
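The unsupervised row of the table is the least intuitive, because no labels are given. A minimal sketch of one common unsupervised technique, k-means clustering, on one-dimensional data: the algorithm is only told how many groups to look for (k = 2 here, an assumption) and finds the group centers by alternately assigning each point to its nearest center and moving each center to the mean of its points. The function name `kmeans_1d` and the data are hypothetical.

```python
def kmeans_1d(points, k, iterations=20):
    """Plain k-means on a list of numbers. Returns the cluster centers, sorted."""
    # Deterministic initialization for this sketch: the k smallest distinct values.
    centers = sorted(set(points))[:k]
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its points
        # (an empty cluster keeps its old center).
        new_centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
        if new_centers == centers:
            break  # converged: centers stopped moving
        centers = new_centers
    return sorted(centers)


# Hypothetical unlabeled data, e.g. daily order counts from two kinds of customers.
data = [1, 2, 3, 10, 11, 12]
print(kmeans_1d(data, k=2))
```

This prints `[2.0, 11.0]`: the two groups were never labeled, yet the algorithm separates them. Note that it still only finds structure present in the data it is given, and the choice of k is a human decision.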

Why Does Data Quality Matter More Than Algorithms?

A model is only as good as the data behind it. Poor data creates poor results, no matter how advanced the model is.

Common data issues include:

● Incomplete records.

● Biased historical data.

● Outdated information.

● Missing edge cases.
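Several of these issues can be caught before training with simple checks. A minimal sketch, assuming records are Python dicts with hypothetical `age`, `income`, `label`, and `updated` fields: it counts incomplete records, records older than a chosen cut-off date, and the share of the most common label, which is one rough signal (not proof) that historical data is skewed. Missing edge cases are harder to detect automatically and usually need domain review.

```python
from collections import Counter
from datetime import date


def audit(records, required_fields, stale_before):
    """Return simple data-quality counts for a list of dict records (illustrative helper)."""
    # Incomplete: any required field missing or None.
    incomplete = sum(1 for r in records if any(r.get(f) is None for f in required_fields))
    # Outdated: last update earlier than the cut-off date.
    outdated = sum(1 for r in records if r.get("updated") and r["updated"] < stale_before)
    # Label balance: a share near 1.0 means one outcome dominates the history.
    labels = Counter(r["label"] for r in records if r.get("label") is not None)
    majority_share = max(labels.values()) / sum(labels.values()) if labels else 0.0
    return {
        "total": len(records),
        "incomplete": incomplete,
        "outdated": outdated,
        "majority_label_share": round(majority_share, 2),
    }


# Hypothetical loan-application records.
records = [
    {"age": 34, "income": 52000, "label": "approved", "updated": date(2025, 6, 1)},
    {"age": None, "income": 48000, "label": "approved", "updated": date(2025, 3, 9)},
    {"age": 51, "income": None, "label": "rejected", "updated": date(2019, 1, 15)},
    {"age": 29, "income": 61000, "label": "approved", "updated": date(2018, 11, 2)},
]

print(audit(records, required_fields=["age", "income", "label"], stale_before=date(2022, 1, 1)))
```

On this sample it reports 2 incomplete records, 2 outdated records, and a majority-label share of 0.75. Reports like this do not fix the data, but they show where cleaning effort should go before any model is trained.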

Learners in an AI Course in Gurgaon often discover that improving data quality does more for results than switching to a more advanced algorithm. This is a hard lesson, but an important one: no model can make up for bad data.

Accuracy Does Not Equal Reliability

High accuracy looks good on paper, but real systems behave differently. A model can score well during testing and still fail in real usage.

This usually happens because:

● Real-world data is unpredictable.

● User behavior changes over time.

● Rare cases were ignored during training.

● The environment is different from test conditions.

That is why strong AI systems focus on stability, not just performance.
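Here is a minimal sketch of how a model can score perfectly under test conditions and still fail in use. It assumes a hypothetical sensor whose readings match the true values during training and testing (for brevity the test inputs are the training inputs), and then drift by +15 after deployment. The data is synthetic and deterministic; nothing about the model changes, only the inputs it receives.

```python
def accuracy(preds, labels):
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def learn_threshold(values, labels):
    """Pick the cut-off t (predict 1 when value > t) with the best training accuracy."""
    best_t, best_acc = None, -1.0
    for t in sorted(set(values)):
        acc = accuracy([1 if v > t else 0 for v in values], labels)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t


# Hypothetical sensor: the true quantity takes values 0, 5, ..., 95.
true_values = list(range(0, 100, 5))
labels = [1 if v > 50 else 0 for v in true_values]

# During training and testing, readings equal the true values.
threshold = learn_threshold(true_values, labels)
test_acc = accuracy([1 if v > threshold else 0 for v in true_values], labels)

# After deployment, assume the sensor drifts and adds +15 to every reading.
live_readings = [v + 15 for v in true_values]
live_acc = accuracy([1 if r > threshold else 0 for r in live_readings], labels)

print(f"threshold={threshold}, test accuracy={test_acc:.2f}, live accuracy={live_acc:.2f}")
```

It prints a test accuracy of 1.00 and a live accuracy of 0.85. The test score was honest; it simply described an environment that no longer exists.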

Overfitting, Underfitting, and Balance

Models commonly fail in two opposite ways:

● Overfitting happens when a model memorizes its training data, including the noise, instead of learning the general pattern.

● Underfitting happens when the model is too simple to capture the pattern at all.

Both lead to poor results in real use. Finding the balance requires testing on data the model has not seen, along with realistic expectations.
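Both failure modes show up in a k-nearest-neighbors regressor, where k controls how flexible the model is. A minimal sketch, assuming synthetic training data from the line y = 2x plus an alternating +3/-3 noise pattern, and held-out points taken from the noise-free line: k = 1 memorizes the training set, k = 10 (every training point) just predicts the overall average, and k = 3 sits in between. The helper names are illustrative.

```python
def knn_predict(train, x, k):
    """Average the y-values of the k training points closest to x."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def mse(train, data, k):
    """Mean squared error of k-NN predictions on a list of (x, y) pairs."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)


# Training data: y = 2x plus an assumed alternating +3/-3 noise pattern.
train = [(x, 2 * x + (3 if x % 2 == 0 else -3)) for x in range(10)]
# Held-out points between the training inputs, from the noise-free line.
test = [(x + 0.4, 2 * (x + 0.4)) for x in range(9)]

for k in (1, 3, 10):
    print(f"k={k:2d}  train MSE={mse(train, train, k):6.2f}  test MSE={mse(train, test, k):6.2f}")
```

With this data, k = 1 has zero training error (it has memorized the noise) but a test MSE of about 9.11; k = 10 is poor on both (test MSE about 26.71); k = 3 does best on unseen points (about 2.00). The right k depends on the data, which is why it is chosen by testing on held-out examples rather than by training error.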

Real-World Constraints AI Cannot Ignore

AI systems face limits that are often ignored in theory.

Some common constraints include:

● Limited processing power.

● Slow response times.

● Security and privacy rules.

● Explainability requirements.

● System integration challenges.

A model that cannot explain its decisions or respond fast enough may never be used, even if it performs well.
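Whether a model responds "fast enough" is usually checked against an explicit latency budget. A minimal sketch, assuming a hypothetical budget of 50 milliseconds and a stand-in `predict` function in place of a real model: it times repeated calls and reports the median and the 95th percentile, since occasional slow responses are often what users notice.

```python
import statistics
import time

LATENCY_BUDGET_MS = 50.0  # hypothetical requirement, not a standard value


def predict(features):
    # Stand-in for a real model call (assumption for this sketch).
    return sum(w * f for w, f in zip([0.4, -0.2, 0.1], features))


def measure_latency(fn, inputs, runs=200):
    """Time fn on the given inputs; return (median, 95th percentile) in milliseconds."""
    timings = []
    for i in range(runs):
        x = inputs[i % len(inputs)]
        start = time.perf_counter()
        fn(x)
        timings.append((time.perf_counter() - start) * 1000)
    p95 = statistics.quantiles(timings, n=20)[-1]  # last of 19 cut points = 95th percentile
    return statistics.median(timings), p95


median_ms, p95_ms = measure_latency(predict, [[1.0, 2.0, 3.0], [0.5, 0.1, 4.2]])
print(f"median={median_ms:.4f} ms, p95={p95_ms:.4f} ms, "
      f"within budget: {p95_ms <= LATENCY_BUDGET_MS}")
```

Timing a toy function says nothing about a real system; the useful habit is measuring the actual model, on realistic inputs and hardware, against a budget agreed on in advance.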

Why Does Explainability Build Trust?

In real businesses, AI decisions affect people. Decisions about loans or healthcare, for example, require explanations. Stakeholders want to know why a decision was made, not just what the result was.

Simple models are easier to explain, audit, and defend; a complex model is harder to justify when questions arise. Professionals should choose models based on impact and risk, not trends.

Trust grows when decisions are transparent and easy to communicate.
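One reason simple models are easier to explain: in a linear scoring model, each feature's contribution is just its weight times its value, so the explanation can be read directly from the calculation. A minimal sketch, assuming a hypothetical loan-scoring model with made-up weights, standardized features, and an approval cut-off of 0. It illustrates the idea and is not a real credit model.

```python
# Hypothetical weights for standardized (z-score) applicant features.
WEIGHTS = {"income": 0.8, "debt_ratio": -1.2, "years_employed": 0.5}
BIAS = -0.1
THRESHOLD = 0.0  # approve when score > 0 (assumed cut-off)


def score_with_explanation(applicant):
    """Return (decision, score, per-feature contributions sorted by impact)."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    decision = "approve" if score > THRESHOLD else "decline"
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return decision, score, ranked


decision, score, reasons = score_with_explanation(
    {"income": 0.5, "debt_ratio": 1.0, "years_employed": 0.2}
)
print(decision, round(score, 2))
for name, value in reasons:
    print(f"  {name}: {value:+.2f}")
```

For this applicant the model declines with a score of -0.8, and the ranked contributions show the decision was driven mainly by the debt ratio (-1.20), which is an explanation a reviewer can check and a customer can understand.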

Deployment Is Where Problems Appear

Building a model is only the first step. Problems often start after deployment.

Teams must watch for:

● Performance drops over time.

● Data changes.

● Unexpected inputs.

● Silent failures.

This is why AI systems need monitoring and regular updates.
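A minimal monitoring sketch for one numeric input feature, under stated assumptions: inputs outside the range seen in training are flagged as unexpected, and drift is flagged when the mean of a recent window moves more than a chosen number of training standard deviations from the training mean. The class name, window size, and sensitivity are hypothetical; production systems usually apply more careful statistical tests, and tracking an actual performance drop requires ground-truth labels, which often arrive late.

```python
import statistics
from collections import deque


class InputMonitor:
    """Watches one numeric input feature in production against training statistics."""

    def __init__(self, training_values, window=50, drift_sigmas=1.0):
        self.low, self.high = min(training_values), max(training_values)
        self.mean = statistics.fmean(training_values)
        self.std = statistics.pstdev(training_values)
        self.drift_sigmas = drift_sigmas      # hypothetical alert sensitivity
        self.recent = deque(maxlen=window)    # sliding window of live inputs

    def observe(self, value):
        """Record one live input; return a list of alert messages (possibly empty)."""
        alerts = []
        # Unexpected input: outside anything seen during training.
        if not self.low <= value <= self.high:
            alerts.append(f"unexpected input {value} outside training range")
        self.recent.append(value)
        # Drift: once the window is full, compare its mean with the training mean.
        if len(self.recent) == self.recent.maxlen:
            shift = abs(statistics.fmean(self.recent) - self.mean)
            if shift > self.drift_sigmas * self.std:
                alerts.append(f"drift: recent mean shifted by {shift:.1f}")
        return alerts


training_inputs = [float(v) for v in range(101)]  # training inputs covered 0..100
monitor = InputMonitor(training_inputs, window=10)

print(monitor.observe(250.0))  # a single out-of-range value triggers an alert
for value in [90.0] * 10:      # a sustained shift fills the window
    alerts = monitor.observe(value)
print(alerts)
```

The first call reports the out-of-range input immediately; the sustained run of 90s, each individually valid, only shows up as drift (a shift of 40.0 from the training mean). Neither problem would raise an error on its own, which is what makes such failures silent.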

Why Do These Concepts Matter for Careers?

Understanding learning logic and constraints separates beginners from professionals.

People who understand these basics:

● Build safer systems.

● Set realistic expectations.

● Communicate better with stakeholders.

● Avoid costly failures.

AI is not about showing intelligence; it is about building systems that behave predictably.

Conclusion

AI models learn patterns, not meaning. Their behavior depends on their data and on real-world limits. When these limits are ignored, systems fail quietly, damaging both the overall plan and its execution.

By understanding how models learn and where they break, professionals move beyond surface knowledge. They start building AI systems that work not just in demos, but in real environments. That is where real AI skill begins, and investing in the courses mentioned above can help shape your career ahead.